If, like most of us, you’ve been keeping a close eye on the developments of NASA’s latest space mission, you may have been intrigued by the ground-breaking technology developed especially for this project.

At this very moment in March of 2021, NASA’s little rover, Perseverance, is using an array of cameras and sensors to navigate its way around Mars. It’s truly fascinating to think that some of the most advanced technology ever made on Earth isn’t even on Earth. So what else have we sent up to space? And when did it begin?

One of the first cameras carried into space by an astronaut was a high-end Swedish camera called a Hasselblad. In 1962 it was chosen by Mercury astronaut Walter Schirra after a recommendation by photographers at National Geographic, and it retailed at around $500 at the time (over $4k in today’s money). To make the Hasselblad suitable for outer space, several modifications had to be made, many of them to reduce weight. The leather covering, auxiliary shutter and reflex mirror were all removed, and the waist-level viewfinder was replaced by a simple, custom side-finder that could be aimed while wearing a helmet and visor. The film magazine held enough film for 70 frames, a considerable increase from the usual 12. The camera was then painted matte black to minimise reflections from the window of the orbiter. This space-ready version of the Hasselblad first accompanied Walter Schirra on Mercury 8 in October 1962, the fifth crewed US space mission.

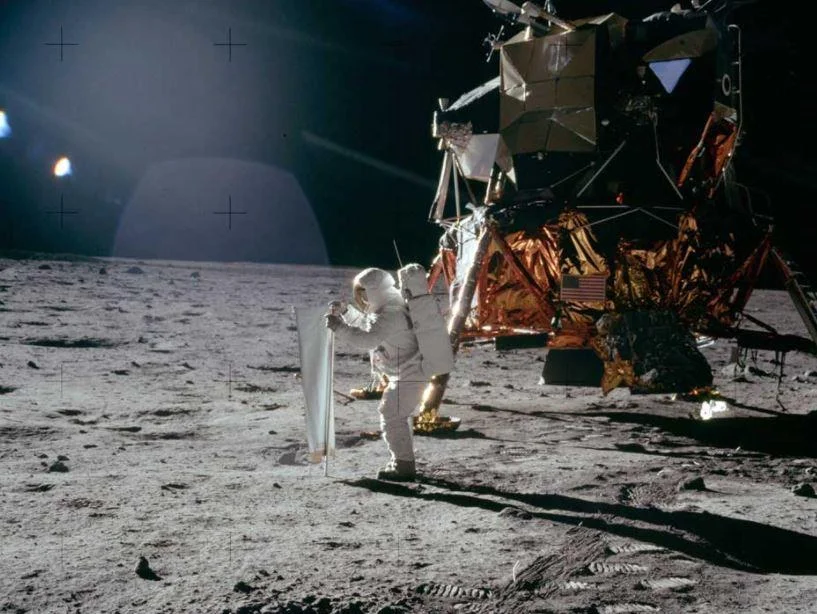

Astronaut Walter Schirra checks out his spacecraft’s camera equipment (photo © NASA)

By the time of the iconic Apollo 11 mission flown by Neil Armstrong, Buzz Aldrin and Michael Collins, Hasselblad and NASA were in full collaboration. In fact, the cameras that captured the first images from the surface of the moon in 1969 were the Hasselblad Data Camera (HDC) with a Zeiss Biogon 60mm f/5.6 lens and a 70mm film magazine, and the Hasselblad Electric Camera (HEC) with a Zeiss Planar 80mm f/2.8 lens. All images were shot with the camera attached to Armstrong’s chest because the data camera lacked a conventional viewfinder. The astronauts were even trained on Earth to aim the camera from chest level, where it had been attached to the spacesuit. Some pretty innovative ideas.

Not so innovative?

That would be the beginnings of the man-made rubbish heap left on the moon following their visit. After the film magazines were removed from the cameras, the astronauts had to leave the cameras and lenses behind because the weight restrictions for the return to Earth were so strict. To this day there are 12 camera bodies and lenses discarded on the surface of the moon. Is it a high price to pay for some of the most iconic photos in history?

Of course, one solution to the technology waste is to go digital. Digital photography was actually first conceived by an engineer at NASA in the 1960s. Eugene F. Lally worked at NASA’s Jet Propulsion Laboratory, and his idea was to take pictures of the planets and stars while travelling through space in order to help establish the astronauts’ position. His proposal detailed an array of electro-optical sensors connected to an on-board computer that would provide autonomous navigation by tracking the relative movement of stars and planetary occultations during space flight and planetary landings. This was the first presentation of the concept of digital photography and digital camera design. Whilst a lot of spacecraft navigation systems were based on his design, it wasn’t until 1975 that the first ever digital camera was built, by Eastman Kodak.

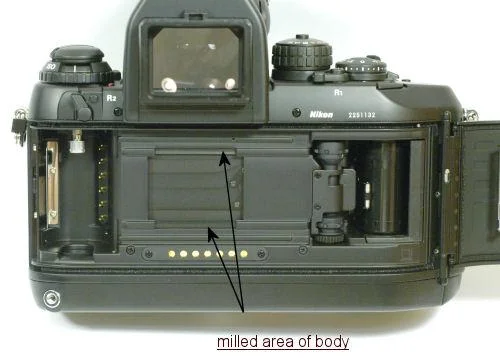

Around the same period, the CCD (charge-coupled device), which contains an array of linked capacitors, was invented. A couple of decades later, it had made its way into mainstream digital imaging. Its image sensor converted light into electrical charges, effectively taking the place of the film inside a traditional camera. Following the trend, NASA began to send CCD cameras into space, and one of the earliest digital cameras sent up was the Nikon NASA F4 Electronic Still Camera. Nikon provided a modified Nikon F4 body, and NASA designed the electronics for the digital camera. It was first flown in September 1991 on board the Space Shuttle Discovery for mission STS-48.

The aim was to evaluate the performance of the Electronic Still Camera through a series of tests throughout the mission. It was used to photograph areas of interest such as the Earth, major cities and geological formations. Tests were made to analyse and compare the camera’s use for Earth-facing images, documentation and support to missions, and its photogrammetric capabilities. Simultaneously, ground-based tasks were carried out to demonstrate the advantages of a digital system, such as producing hard-copy prints of the downlinked images during the mission, and processing and storing the images on disks for transfer to the laboratory.

Fast-forward to 2020 and imaging technology has moved on again. The Mars 2020 Space Mission (part of NASA’s Mars Exploration Programme) and its rover, Perseverance, launched from Earth on the 30th of July 2020, and landing on Mars was confirmed on the 18th of February 2021. The aim of Perseverance is to investigate any signs of life on Mars and assess the possibility of future habitability. So how is it doing this? Perseverance has a combination of 19 CMOS and CCD cameras and 2 microphones. The cameras are split between engineering and science tasks. The engineering cameras helped the rover land on Mars, and a set of six hazard avoidance cameras now serves as its “eyes” on the surface. They allow the rover to move almost entirely autonomously.

For that “touchdown” on Mars, the engineering team added specific cameras and a microphone to document the entry, descent and landing in great detail. This collection of cameras and engineering technology gave the public a front-row seat to a Mars landing for the first time in history. They recorded stunning views of the landing in full colour during what was described as “the seven minutes of terror”, a complicated set of entry, descent and landing procedures. The set included parachute “up look” cameras, descent-stage “down look” cameras, rover “up look” cameras, and rover “down look” cameras. Each of these cameras is built around an image detector, and the detector used in the LCAM (Lander Vision System Camera), which is mounted to the bottom of the rover and points downward, is an ON Semi PYTHON 5000.

The Martian PYTHON 5000 is a 1-inch, 5.3-megapixel CMOS image sensor with a pixel array of 2592 by 2048 pixels. Its large sensor area, with 4.8 µm x 4.8 µm pixels, is billed as low noise and high sensitivity, ideal for the variable light conditions that are likely to exist on the surface of Mars.
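If you want to see how those headline numbers fit together, here is a minimal Python sketch that works them out from the pixel array and pixel size quoted above. The function and variable names are our own for illustration, and it assumes the usual convention that a “1 inch” optical format corresponds to a sensor diagonal of roughly 16 mm rather than a literal 25.4 mm.

```python
import math

# Figures quoted in the text for the PYTHON 5000
WIDTH_PX = 2592
HEIGHT_PX = 2048
PIXEL_PITCH_UM = 4.8  # pixel size in micrometres (square pixels)


def sensor_geometry(width_px, height_px, pitch_um):
    """Return (megapixels, width_mm, height_mm, diagonal_mm) of the pixel array.

    Illustrative helper only; it considers the active pixel array
    and ignores any border or dark pixels a real device may have.
    """
    megapixels = width_px * height_px / 1e6
    width_mm = width_px * pitch_um / 1000
    height_mm = height_px * pitch_um / 1000
    diagonal_mm = math.hypot(width_mm, height_mm)
    return megapixels, width_mm, height_mm, diagonal_mm


mp, w, h, d = sensor_geometry(WIDTH_PX, HEIGHT_PX, PIXEL_PITCH_UM)
print(f"{mp:.1f} MP, {w:.2f} mm x {h:.2f} mm, diagonal {d:.2f} mm")
# prints: 5.3 MP, 12.44 mm x 9.83 mm, diagonal 15.86 mm
```

The diagonal of just under 16 mm is what earns the sensor its “1 inch” label, and the 2592 by 2048 array gives the 5.3 megapixels.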

We can supply this sensor in our HIK Robot Cameras. The MV-CA050-20GN is a medium/high resolution industrial camera for factory automation and robot vision applications. An added feature of this camera is its superior sensitivity in the near-infrared band. There are distinct benefits (and some disadvantages) in using NIR cameras and light sources in factory automation, but that’s for another blog story.